Automated Machine Learning and Optimization

Can we use Machine Learning techniques to improve Machine Learning processes themselves? Automated Machine Learning (AutoML) aims to remove (some of) the human element from choosing ML hyperparameters and methods. This gives rise to a difficult optimization problem in which a single performance evaluation can take a long time, so fast convergence is desirable. Our group therefore addresses the following questions:

- How can we perform optimization as efficiently as possible when single function evaluations are expensive? We tackle this “expensive black-box optimization problem” with “Model-Based Optimization”, sometimes called “Bayesian Optimization”, which itself relies on machine learning methods: a surrogate model fitted to the evaluations made so far decides which point to evaluate next.

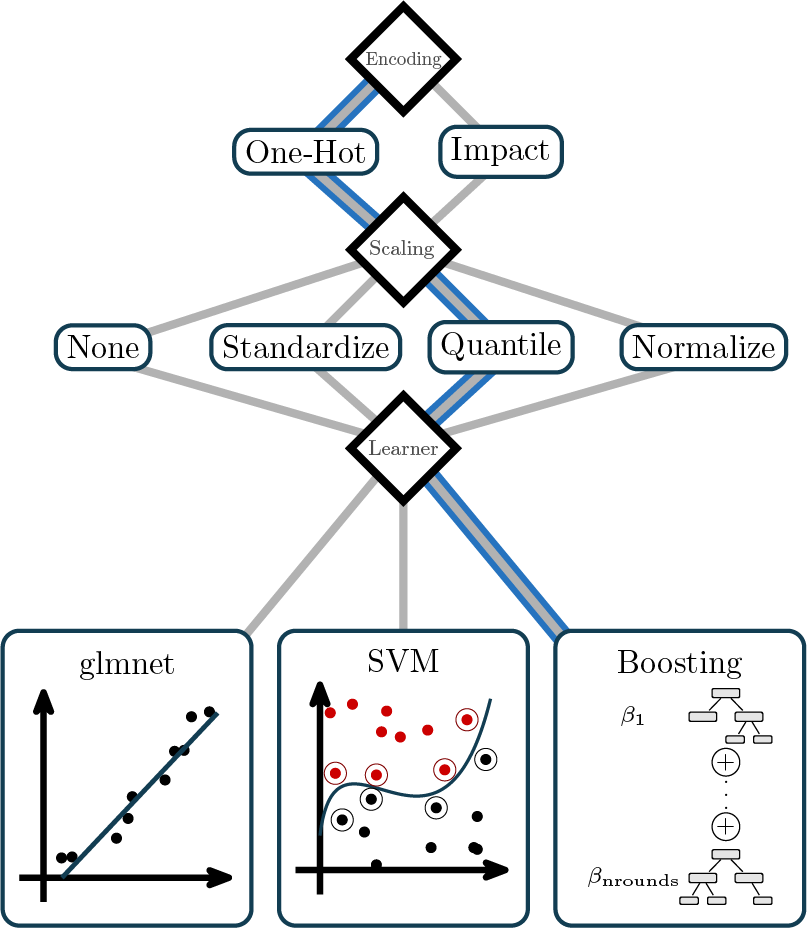

- How can we use optimization methods to automatically improve Machine Learning methods for a given problem? For this, we may have to choose not only the hyperparameters of a given Machine Learning algorithm but also the algorithm itself, in combination with possible preprocessing methods and/or methods for ensembling multiple algorithms.

- Do AutoML methods actually lead to better outcomes? A problem that arises whenever optimization is performed (by machines or, implicitly, by humans!) is that the resulting methods may perform well on the training data but generalize poorly when used in the wild. This happens not only when an algorithm is optimized on a training dataset and then performs worse on new, unseen data, but also when researchers develop a method that works well on our benchmark datasets yet fails to perform well in real-world applications. We therefore investigate ways to evaluate and compare AutoML methods on robust and meaningful benchmarks that tell us whether these methods are useful.
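The model-based optimization loop from the first question can be sketched in a few lines. This is a minimal, stdlib-only Python sketch under stated assumptions, not the implementation in mlrMBO or mlr3mbo (which are R packages with their own APIs): a one-dimensional search space [0, 1], a Gaussian-process surrogate with a fixed squared-exponential kernel (length scale 0.2, chosen arbitrarily), expected improvement as the acquisition function, and a plain grid search to maximize it. `expensive_objective` is a hypothetical, cheap stand-in for a costly evaluation such as cross-validating a model.

```python
import math


def rbf(a, b, length_scale=0.2):
    """Squared-exponential kernel with unit signal variance (length scale is an assumption)."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def cholesky(K):
    """Return lower-triangular L with L L^T = K for a positive-definite matrix K."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(K[i][i] - s)
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(L)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_upper(L, b):
    """Solve L^T x = b by back substitution (L is lower-triangular)."""
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit_gp(X, y, jitter=1e-6):
    """Fit a zero-mean GP surrogate to standardized targets and return a predictor."""
    m = sum(y) / len(y)
    s = math.sqrt(sum((v - m) ** 2 for v in y) / len(y)) or 1.0
    z = [(v - m) / s for v in y]
    K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = solve_upper(L, solve_lower(L, z))  # alpha = K^{-1} z

    def predict(x):
        k = [rbf(x, a) for a in X]
        mu = sum(ki * ai for ki, ai in zip(k, alpha))
        v = solve_lower(L, k)
        var = max(1.0 - sum(t * t for t in v), 1e-12)
        return m + s * mu, s * math.sqrt(var)  # back on the original scale

    return predict


def expected_improvement(mu, sigma, best):
    """EI for minimization: E[max(best - Y, 0)] with Y ~ N(mu, sigma^2)."""
    z = (best - mu) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mu) * cdf + sigma * pdf


def model_based_optimization(f, n_iter=10):
    """Minimize f on [0, 1] with a GP surrogate and expected improvement."""
    X = [0.0, 0.5, 1.0]                         # small initial design
    y = [f(x) for x in X]
    candidates = [i / 200 for i in range(201)]  # cheap grid for the acquisition
    for _ in range(n_iter):
        predict = fit_gp(X, y)                  # refit the surrogate on all data
        best = min(y)
        x_next = max(candidates,
                     key=lambda c: expected_improvement(*predict(c), best))
        X.append(x_next)
        y.append(f(x_next))                     # one expensive evaluation per step
    i_best = y.index(min(y))
    return X[i_best], y[i_best], X, y


def expensive_objective(x):
    # Hypothetical stand-in for, e.g., a cross-validated error; minimum at x = 0.3.
    return (x - 0.3) ** 2


best_x, best_y, X, y = model_based_optimization(expensive_objective, n_iter=10)
print(f"best x = {best_x:.3f}, f(best x) = {best_y:.5f} after {len(X)} evaluations")
```

The point of the design is that the surrogate and the acquisition function are cheap enough to query hundreds of times per iteration, so the search effort goes into them and only one call to the expensive function is made per step.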
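The second question, choosing the algorithm and its preprocessing together with the hyperparameters, is often framed as a search over one joint, conditional space in which some hyperparameters only exist once a particular algorithm has been chosen. A minimal sketch, assuming a made-up three-algorithm space and plain random search as the optimizer (a model-based optimizer would normally take its place); `SEARCH_SPACE`, `toy_error`, and all ranges are hypothetical and are not the API of mlr3automl or any other package listed on this page.

```python
import math
import random

# Hypothetical joint search space: a preprocessing step, an algorithm, and
# hyperparameters that only exist for the chosen algorithm (conditional parameters).
SEARCH_SPACE = {
    "preprocessing": ["none", "standardize", "pca"],
    "algorithms": {
        "svm": {"C": (1e-3, 1e3, "log"), "gamma": (1e-4, 1e1, "log")},
        "random_forest": {"n_trees": (50, 500, "int"), "max_features": (0.1, 1.0, "float")},
        "knn": {"k": (1, 50, "int")},
    },
}


def sample_value(low, high, kind, rng):
    """Draw one hyperparameter value; 'log' means uniform on a log scale."""
    if kind == "int":
        return rng.randint(low, high)
    if kind == "log":
        return math.exp(rng.uniform(math.log(low), math.log(high)))
    return rng.uniform(low, high)


def sample_pipeline(rng):
    """Sample an algorithm, only that algorithm's hyperparameters, and a preprocessing step."""
    algorithm = rng.choice(sorted(SEARCH_SPACE["algorithms"]))
    params = {name: sample_value(*spec, rng)
              for name, spec in SEARCH_SPACE["algorithms"][algorithm].items()}
    return {"preprocessing": rng.choice(SEARCH_SPACE["preprocessing"]),
            "algorithm": algorithm, **params}


def random_search(evaluate, n_trials=50, seed=0):
    """Return (score, config) of the best of n_trials random pipelines (lower is better)."""
    rng = random.Random(seed)
    scored = [(evaluate(cfg), cfg) for cfg in (sample_pipeline(rng) for _ in range(n_trials))]
    return min(scored, key=lambda pair: pair[0])


def toy_error(cfg):
    # Hypothetical stand-in for a cross-validated error of the whole pipeline.
    base = {"svm": 0.15, "random_forest": 0.12, "knn": 0.20}[cfg["algorithm"]]
    return base + (0.02 if cfg["preprocessing"] == "none" else 0.0)


best_score, best_cfg = random_search(toy_error, n_trials=50)
print(best_score, best_cfg)
```

Because each sampled configuration carries only the hyperparameters of its own algorithm, the optimizer never wastes evaluations on settings that would be ignored.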
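The generalization problem in the third question can be made concrete with a toy simulation of selection bias. The sketch below assumes, purely for illustration, 200 configurations that all share the same true error of 0.20 and whose validation estimates carry Gaussian noise with standard deviation 0.02; the function names and all numbers are hypothetical and this is not the group's evaluation methodology. Picking the configuration with the lowest validation error then yields an estimate that is systematically too optimistic, even though no configuration is genuinely better than any other.

```python
import random
import statistics


def select_best_config(n_configs, true_error, noise_sd, rng):
    """Pick the configuration with the lowest *noisy* validation error.

    Every configuration has the same true error, so the winner is selected
    purely because of favourable noise in its validation estimate.
    """
    val_errors = [true_error + rng.gauss(0.0, noise_sd) for _ in range(n_configs)]
    best_val = min(val_errors)
    # An independent, equally noisy estimate on fresh data for the chosen config.
    test_error = true_error + rng.gauss(0.0, noise_sd)
    return best_val, test_error


def average_optimism(n_repeats=500, n_configs=200, true_error=0.20,
                     noise_sd=0.02, seed=0):
    """Mean gap between the winner's test error and its validation error."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_repeats):
        best_val, test_error = select_best_config(n_configs, true_error, noise_sd, rng)
        gaps.append(test_error - best_val)
    return statistics.mean(gaps)


gap = average_optimism()
print(f"average optimism of the selected validation error: {gap:.3f}")
```

With these assumed settings the gap is roughly 2.7 noise standard deviations, because the minimum of 200 noisy draws sits far below their common mean; more configurations or noisier estimates make the optimism larger.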

Members

| Name | Position |
|---|---|
| Prof. Dr. Matthias Feurer | Thomas Bayes Fellow / MCML |
| Salem Ayadi | PhD Student |
| Florian Karl | PhD Student |
| Martin Binder | PhD Student |
| David Rundel | PhD Student |

Projects and Software

- AutoML Benchmark: Reproducible Benchmarks for AutoML Systems.

- autoxgboost: Automatic Tuning and Fitting of XGBoost.

- autoxgboostMC: Multi-Objective Automatic Tuning and Fitting of XGBoost.

- miesmuschel: Mixed Integer Evolutionary Strategies.

- mlr3automl: Automated Machine Learning with mlr3.

- mlr3hyperband: Multi-Armed Bandit Approach to Hyperparameter Tuning for mlr3.

- mlr3mbo: Model-based optimization with mlr3.

- mlrMBO: Model-based optimization with mlr.

- mosmafs: Multi-Objective Simultaneous Model and Feature Selection.

- YAHPO Gym: Benchmarking suite for HPO.

Publications

- Schneider L, Bischl B, Feurer M (2025) Overtuning in Hyperparameter Optimization. 4th International Conference on Automated Machine Learning.

- Benjamins C, Graf H, Segel S, Deng D, Ruhkopf T, Hennig L, Basu S, Mallik N, Bergman E, Chen D, Clément F, Feurer M, Eggensperger K, Hutter F, Doerr C, Lindauer M (2025) carps: A Framework for Comparing N Hyperparameter Optimizers on M Benchmarks. arXiv:2506.06143 [cs.LG].

- Rundel D, Sommer E, Bischl B, Rügamer D, Feurer M (2025) Efficiently Warmstarting MCMC for BNNs. Workshop on Frontiers in Probabilistic Inference: Learning meets Sampling at ICLR 2025.

- Zehle T, Schlager M, Heiß T, Feurer M (2025) CAPO: Cost-Aware Prompt Optimization. 4th International Conference on Automated Machine Learning.

Link | PDF | arXiv | Code. - Lindauer M, Karl F, Klier A, Moosbauer J, Tornede A, Müller AC, Hutter F, Feurer M, Bischl B (2024) Position: A Call to Action for a Human-Centered AutoML Paradigm Proceedings of the 41st International Conference on Machine Learning, pp. 30566–30584. PMLR.

Link | PDF | arXiv. - Weerts H, Pfisterer F, Feurer M, Eggensperger K, Bergman E, Awad N, Vanschoren J, Pechenizkiy M, Bischl B, Hutter F (2024) Can Fairness be Automated? Guidelines and Opportunities for Fairness-aware AutoML. Journal of Artificial Intelligence Research 79, 639–677.

link|pdf. - Gündüz HA, Mreches R, Moosbauer J, Robertson G, To X-Y, Franzosa EA, Huttenhower C, Rezaei M, McHardy AC, Bischl B, Münch PC, Binder M (2024) Optimized model architectures for deep learning on genomic data. Communications Biology 7, 516.

link | pdf. - Karl F, Thomas J, Elstner J, Gross R, Bischl B (2024) Automated Machine Learning. In: In: Mutschler C , In: Münzenmayer C , In: Uhlmann N , In: Martin A (eds) Unlocking Artificial Intelligence: From Theory to Applications, pp. 3–25. Springer Nature Switzerland.

Link | PDF. - Bergman E, Feurer M, Bahram A, Balef AR, Purucker L, Segel S, Lindauer M, Hutter F, Eggensperger K (2024) AMLTK: A Modular AutoML Toolkit in Python. Journal of Open Source Software 9, 6367.

- Nagler T, Schneider L, Bischl B, Feurer M (2024) Reshuffling Resampling Splits Can Improve Generalization of Hyperparameter Optimization. Advances in Neural Information Processing Systems, pp. 40486–40533.

- Gijsbers P, Bueno MLP, Coors S, LeDell E, Poirier S, Thomas J, Bischl B, Vanschoren J (2024) AMLB: an AutoML Benchmark. Journal of Machine Learning Research 25, 1–65.

- Segel S, Graf H, Tornede A, Bischl B, Lindauer M (2023) Symbolic Explanations for Hyperparameter Optimization. In: Faust A, Garnett R, White C, Hutter F, Gardner JR (eds) Proceedings of the Second International Conference on Automated Machine Learning, pp. 2/1–22. PMLR.

- Bischl B, Binder M, Lang M, Pielok T, Richter J, Coors S, Thomas J, Ullmann T, Becker M, Boulesteix A-L, Deng D, Lindauer M (2023) Hyperparameter Optimization: Foundations, Algorithms, Best Practices, and Open Challenges. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, e1484.

- Feurer M, Eggensperger K, Bergman E, Pfisterer F, Bischl B, Hutter F (2023) Mind the Gap: Measuring Generalization Performance Across Multiple Objectives. In: Crémilleux B, Hess S, Nijssen S (eds) Advances in Intelligent Data Analysis XXI. IDA 2023, pp. 130–142. Springer, Cham.

- Prager RP, Dietrich K, Schneider L, Schäpermeier L, Bischl B, Kerschke P, Trautmann H, Mersmann O (2023) Neural Networks as Black-Box Benchmark Functions Optimized for Exploratory Landscape Features. Proceedings of the 17th ACM/SIGEVO Conference on Foundations of Genetic Algorithms, pp. 129–139.

- Purucker L, Schneider L, Anastacio M, Beel J, Bischl B, Hoos H (2023) Q(D)O-ES: Population-based Quality (Diversity) Optimisation for Post Hoc Ensemble Selection in AutoML. AutoML Conference 2023.

- Scheppach A, Gündüz HA, Dorigatti E, Münch PC, McHardy AC, Bischl B, Rezaei M, Binder M (2023) Neural Architecture Search for Genomic Sequence Data. 2023 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), pp. 1–10.

- Schneider L, Bischl B, Thomas J (2023) Multi-Objective Optimization of Performance and Interpretability of Tabular Supervised Machine Learning Models. Proceedings of the Genetic and Evolutionary Computation Conference, pp. 538–547.

- Karl F, Pielok T, Moosbauer J, Pfisterer F, Coors S, Binder M, Schneider L, Thomas J, Richter J, Lang M, Garrido-Merchán EC, Branke J, Bischl B (2023) Multi-Objective Hyperparameter Optimization in Machine Learning – An Overview. ACM Transactions on Evolutionary Learning and Optimization 3, 1–50.

- Moosbauer J, Binder M, Schneider L, Pfisterer F, Becker M, Lang M, Kotthoff L, Bischl B (2022) Automated Benchmark-Driven Design and Explanation of Hyperparameter Optimizers. IEEE Transactions on Evolutionary Computation 26, 1336–1350.

- Pfisterer F, Schneider L, Moosbauer J, Binder M, Bischl B (2022) Yahpo Gym – An Efficient Multi-Objective Multi-Fidelity Benchmark for Hyperparameter Optimization. International Conference on Automated Machine Learning, pp. 3–1. PMLR.

- Schneider L, Pfisterer F, Kent P, Branke J, Bischl B, Thomas J (2022) Tackling Neural Architecture Search With Quality Diversity Optimization. International Conference on Automated Machine Learning, pp. 9–1. PMLR.

- Schneider L, Pfisterer F, Thomas J, Bischl B (2022) A Collection of Quality Diversity Optimization Problems Derived from Hyperparameter Optimization of Machine Learning Models. Proceedings of the Genetic and Evolutionary Computation Conference Companion, pp. 2136–2142.

- Schneider L, Schäpermeier L, Prager RP, Bischl B, Trautmann H, Kerschke P (2022) HPO X ELA: Investigating Hyperparameter Optimization Landscapes by Means of Exploratory Landscape Analysis. Parallel Problem Solving from Nature – PPSN XVII, pp. 575–589.

- Moosbauer J, Casalicchio G, Lindauer M, Bischl B (2022) Enhancing Explainability of Hyperparameter Optimization via Bayesian Algorithm Execution. arXiv:2111.14756 [cs.LG].

- *Coors S, *Schalk D, Bischl B, Rügamer D (2021) Automatic Componentwise Boosting: An Interpretable AutoML System. ECML-PKDD Workshop on Automating Data Science.

- Gijsbers P, Pfisterer F, Rijn JN van, Bischl B, Vanschoren J (2021) Meta-Learning for Symbolic Hyperparameter Defaults. 2021 Genetic and Evolutionary Computation Conference Companion (GECCO ’21 Companion).

- Pfisterer F, Rijn JN van, Probst P, Müller A, Bischl B (2021) Learning Multiple Defaults for Machine Learning Algorithms. 2021 Genetic and Evolutionary Computation Conference Companion (GECCO ’21 Companion).

- Gerostathopoulos I, Plášil F, Prehofer C, Thomas J, Bischl B (2021) Automated Online Experiment-Driven Adaptation–Mechanics and Cost Aspects. IEEE Access 9, 58079–58087.

- Kaminwar SR, Goschenhofer J, Thomas J, Thon I, Bischl B (2021) Structured Verification of Machine Learning Models in Industrial Settings. Big Data.

- Binder M, Pfisterer F, Lang M, Schneider L, Kotthoff L, Bischl B (2021) mlr3pipelines - Flexible Machine Learning Pipelines in R. Journal of Machine Learning Research 22, 1–7.

- Schneider L, Pfisterer F, Binder M, Bischl B (2021) Mutation is All You Need. 8th ICML Workshop on Automated Machine Learning.

- Moosbauer J, Herbinger J, Casalicchio G, Lindauer M, Bischl B (2021) Explaining Hyperparameter Optimization via Partial Dependence Plots. Advances in Neural Information Processing Systems (NeurIPS 2021) 34.

- Moosbauer J, Herbinger J, Casalicchio G, Lindauer M, Bischl B (2021) Towards Explaining Hyperparameter Optimization via Partial Dependence Plots. 8th ICML Workshop on Automated Machine Learning (AutoML).

- Binder M, Pfisterer F, Bischl B (2020) Collecting Empirical Data About Hyperparameters for Data Driven AutoML. Proceedings of the 7th ICML Workshop on Automated Machine Learning (AutoML 2020).

- Binder M, Moosbauer J, Thomas J, Bischl B (2020) Multi-Objective Hyperparameter Tuning and Feature Selection Using Filter Ensembles. Proceedings of the 2020 Genetic and Evolutionary Computation Conference, pp. 471–479. Association for Computing Machinery, New York, NY, USA.

- Ellenbach N, Boulesteix A-L, Bischl B, Unger K, Hornung R (2020) Improved Outcome Prediction Across Data Sources Through Robust Parameter Tuning. Journal of Classification, 1–20.

- Sun X, Bommert A, Pfisterer F, Rahnenführer J, Lang M, Bischl B (2020) High Dimensional Restrictive Federated Model Selection with Multi-objective Bayesian Optimization over Shifted Distributions. In: Bi Y, Bhatia R, Kapoor S (eds) Intelligent Systems and Applications, pp. 629–647. Springer International Publishing, Cham.

- Pfisterer F, Thomas J, Bischl B (2019) Towards Human Centered AutoML. arXiv preprint arXiv:1911.02391.

- Pfisterer F, Coors S, Thomas J, Bischl B (2019) Multi-Objective Automatic Machine Learning with AutoxgboostMC. arXiv preprint arXiv:1908.10796.

- Sun X, Lin J, Bischl B (2019) ReinBo: Machine Learning pipeline search and configuration with Bayesian Optimization embedded Reinforcement Learning. CoRR.

- Probst P, Boulesteix A-L, Bischl B (2019) Tunability: Importance of Hyperparameters of Machine Learning Algorithms. Journal of Machine Learning Research 20, 1–32.

- Schüller N, Boulesteix A-L, Bischl B, Unger K, Hornung R (2019) Improved outcome prediction across data sources through robust parameter tuning. 221.

- Gijsbers P, LeDell E, Thomas J, Poirier S, Bischl B, Vanschoren J (2019) An Open Source AutoML Benchmark. CoRR.

- Rijn JN van, Pfisterer F, Thomas J, Bischl B, Vanschoren J (2018) Meta Learning for Defaults–Symbolic Defaults. NeurIPS 2018 Workshop on Meta Learning.

- Kühn D, Probst P, Thomas J, Bischl B (2018) Automatic Exploration of Machine Learning Experiments on OpenML. arXiv preprint arXiv:1806.10961.

- Thomas J, Coors S, Bischl B (2018) Automatic Gradient Boosting. ICML AutoML Workshop.

- Cáceres LP, Bischl B, Stützle T (2017) Evaluating Random Forest Models for Irace. Proceedings of the Genetic and Evolutionary Computation Conference Companion, pp. 1146–1153. Association for Computing Machinery.

- Bischl B, Richter J, Bossek J, Horn D, Thomas J, Lang M (2017) mlrMBO: A Modular Framework for Model-Based Optimization of Expensive Black-Box Functions. arXiv preprint arXiv:1703.03373.

- Horn D, Dagge M, Sun X, Bischl B (2017) First Investigations on Noisy Model-Based Multi-objective Optimization. Evolutionary Multi-Criterion Optimization: 9th International Conference, EMO 2017, Münster, Germany, March 19-22, 2017, Proceedings, pp. 298–313. Springer International Publishing, Cham.

- Horn D, Bischl B, Demircioglu A, Glasmachers T, Wagner T, Weihs C (2017) Multi-objective selection of algorithm portfolios. Archives of Data Science.

- Kotthaus H, Richter J, Lang A, Thomas J, Bischl B, Marwedel P, Rahnenführer J, Lang M (2017) RAMBO: Resource-Aware Model-Based Optimization with Scheduling for Heterogeneous Runtimes and a Comparison with Asynchronous Model-Based Optimization. International Conference on Learning and Intelligent Optimization, pp. 180–195. Springer.

- Horn D, Bischl B (2016) Multi-objective Parameter Configuration of Machine Learning Algorithms using Model-Based Optimization. 2016 IEEE Symposium Series on Computational Intelligence (SSCI), pp. 1–8. IEEE.

- Bischl B, Kerschke P, Kotthoff L, Lindauer M, Malitsky Y, Fréchette A, Hoos H, Hutter F, Leyton-Brown K, Tierney K, Vanschoren J (2016) ASlib: A Benchmark Library for Algorithm Selection. Artificial Intelligence 237, 41–58.

- Demircioglu A, Horn D, Glasmachers T, Bischl B, Weihs C (2016) Fast model selection by limiting SVM training times.

- Degroote H, Bischl B, Kotthoff L, De Causmaecker P (2016) Reinforcement Learning for Automatic Online Algorithm Selection - an Empirical Study. Proceedings of the 16th ITAT Conference Information Technologies - Applications and Theory (ITAT 2016), Tatranské Matliare, Slovakia, September 15-19, 2016, pp. 93–101. CEUR-WS.org.

- Mantovani RG, Rossi ALD, Vanschoren J, Bischl B, Carvalho ACPLF (2015) To tune or not to tune: Recommending when to adjust SVM hyper-parameters via meta-learning. 2015 International Joint Conference on Neural Networks (IJCNN), pp. 1–8.

- Bossek J, Bischl B, Wagner T, Rudolph G (2015) Learning feature-parameter mappings for parameter tuning via the profile expected improvement. Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation, pp. 1319–1326. Association for Computing Machinery.

- Brockhoff D, Bischl B, Wagner T (2015) The Impact of Initial Designs on the Performance of MATSuMoTo on the Noiseless BBOB-2015 Testbed: A Preliminary Study. Proceedings of the Companion Publication of the 2015 Annual Conference on Genetic and Evolutionary Computation, pp. 1159–1166. Association for Computing Machinery, Madrid, Spain.

- Horn D, Wagner T, Biermann D, Weihs C, Bischl B (2015) Model-Based Multi-Objective Optimization: Taxonomy, Multi-Point Proposal, Toolbox and Benchmark. In: Gaspar-Cunha A, Henggeler Antunes C, Coello CC (eds) Evolutionary Multi-Criterion Optimization (EMO), pp. 64–78. Springer.

- Lang M, Kotthaus H, Marwedel P, Weihs C, Rahnenführer J, Bischl B (2015) Automatic model selection for high-dimensional survival analysis. Journal of Statistical Computation and Simulation 85, 62–76.

- Bischl B (2015) Applying Model-Based Optimization to Hyperparameter Optimization in Machine Learning. Proceedings of the 2015 International Conference on Meta-Learning and Algorithm Selection - Volume 1455, p. 1. CEUR-WS.org, Aachen, DEU.

- Mersmann O, Preuss M, Trautmann H, Bischl B, Weihs C (2015) Analyzing the BBOB Results by Means of Benchmarking Concepts. Evolutionary Computation Journal 23, 161–185.

- Bischl B, Wessing S, Bauer N, Friedrichs K, Weihs C (2014) MOI-MBO: Multiobjective Infill for Parallel Model-Based Optimization. In: Pardalos PM, Resende MGC, Vogiatzis C, Walteros JL (eds) Learning and Intelligent Optimization, pp. 173–186. Springer.

- Kerschke P, Preuss M, Hernández C, Schütze O, Sun J-Q, Grimme C, Rudolph G, Bischl B, Trautmann H (2014) Cell Mapping Techniques for Exploratory Landscape Analysis. Proceedings of the EVOLVE 2014: A Bridge between Probability, Set Oriented Numerics, and Evolutionary Computation, pp. 115–131. Springer.

- Vatolkin I, Bischl B, Rudolph G, Weihs C (2014) Statistical Comparison of Classifiers for Multi-objective Feature Selection in Instrument Recognition. In: Spiliopoulou M, Schmidt-Thieme L, Janning R (eds) Data Analysis, Machine Learning and Knowledge Discovery, pp. 171–178. Springer.

- Hess S, Wagner T, Bischl B (2013) PROGRESS: Progressive Reinforcement-Learning-Based Surrogate Selection. In: Nicosia G, Pardalos P (eds) Learning and Intelligent Optimization, pp. 110–124. Springer.

- Mersmann O, Bischl B, Trautmann H, Wagner M, Bossek J, Neumann F (2013) A novel feature-based approach to characterize algorithm performance for the traveling salesperson problem. Annals of Mathematics and Artificial Intelligence 69, 151–182.

- Bischl B, Mersmann O, Trautmann H, Preuss M (2012) Algorithm Selection Based on Exploratory Landscape Analysis and Cost-Sensitive Learning. Proceedings of the 14th Annual Conference on Genetic and Evolutionary Computation, pp. 313–320.

- Koch P, Bischl B, Flasch O, Bartz-Beielstein T, Weihs C, Konen W (2012) Tuning and evolution of support vector kernels. Evolutionary Intelligence 5, 153–170.

- Mersmann O, Bischl B, Bossek J, Trautmann H, Wagner M, Neumann F (2012) Local Search and the Traveling Salesman Problem: A Feature-Based Characterization of Problem Hardness. Learning and Intelligent Optimization Conference (LION), pp. 115–129. Springer Berlin Heidelberg, Berlin, Heidelberg.

- Bischl B, Mersmann O, Trautmann H, Weihs C (2012) Resampling Methods for Meta-Model Validation with Recommendations for Evolutionary Computation. Evolutionary Computation 20, 249–275.

- Mersmann O, Bischl B, Trautmann H, Preuss M, Weihs C, Rudolph G (2011) Exploratory Landscape Analysis. In: Krasnogor N (ed) Proceedings of the 13th annual conference on genetic and evolutionary computation (GECCO ’11), pp. 829–836. Association for Computing Machinery, New York, NY, USA.

- Koch P, Bischl B, Flasch O, Bartz-Beielstein T, Konen W (2011) On the Tuning and Evolution of Support Vector Kernels. Research Center CIOP (Computational Intelligence, Optimization and Data Mining), Cologne University of Applied Science, Faculty of Computer Science and Engineering Science.

- Bischl B, Mersmann O, Trautmann H (2010) Resampling Methods in Model Validation. In: Bartz-Beielstein T, Chiarandini M, Paquete L, Preuss M (eds) WEMACS – Proceedings of the Workshop on Experimental Methods for the Assessment of Computational Systems, Technical Report TR 10-2-007, Department of Computer Science, TU Dortmund University.

- Richter J, Kotthaus H, Bischl B, Marwedel P, Rahnenführer J, Lang M (2016) Faster Model-Based Optimization through Resource-Aware Scheduling Strategies. Proceedings of the 10th Learning and Intelligent OptimizatioN Conference (LION 10), Ischia Island (Napoli), Italy.
